Creating custom video effects for government digital signage is a complex task that requires an understanding of specific requirements, in-depth knowledge of video processing, and programming skills. This article provides programmers with a step-by-step guide to developing and optimizing video effects for government needs.

Understanding Digital Signage Requirements

Government-Specific Needs

Digital signage in government agencies must meet unique requirements. It may be used to broadcast emergency notifications, visualize data, or showcase public services. System reliability and resilience to failure are important considerations, especially in mission-critical applications such as emergency management centers.

Technical Specifications

Video effects must meet strict technical requirements. For example, support for resolutions of 4K and higher is becoming standard, especially for large displays in public spaces. Compatibility with different hardware platforms, such as specialized media servers or single-board computers like the Raspberry Pi, must also be considered.

Accessibility Standards

Compliance with accessibility standards such as WCAG is essential. This includes providing sufficient color contrast, adding captions, and supplying text alternatives for visual content. For example, text transition effects should be smooth and free of rapid flashing (WCAG limits content that flashes more than three times per second), and text should remain on screen long enough to be read by people with visual impairments.
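
The color contrast requirement can be checked in code. The sketch below follows the relative luminance and contrast ratio definitions published in WCAG 2.x, with the Level AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are illustrative and not part of any signage SDK.

```python
def _linearize(channel_8bit):
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    """Relative luminance of an (R, G, B) tuple with 0-255 channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1.0 (identical) to 21.0."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """True if the pair meets the WCAG Level AA contrast threshold."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

A check like this could run whenever an effect changes text or background colors, so that a color animation never passes through a low-contrast state.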

Video Processing Fundamentals

Frame Manipulation Techniques

Frame manipulation techniques involve changing frame parameters such as brightness, contrast, and saturation. For example, convolution filters applied to each frame can create a blur effect.
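
As a minimal sketch of the blur example, the function below applies a 3x3 kernel to a grayscale frame stored as a list of rows. It assumes 8-bit pixel values and clamps coordinates at the frame edges; a production system would normally use OpenCV or a GPU shader for this, and the names here are illustrative.

```python
def convolve3x3(frame, kernel):
    """Apply a 3x3 kernel to a grayscale frame (list of rows of 0-255 ints).

    The kernel is applied without flipping, which is identical to a true
    convolution for symmetric kernels such as a box blur. Pixels outside
    the frame reuse the nearest edge pixel (clamp-to-edge).
    """
    height, width = len(frame), len(frame[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    sy = min(max(y + ky - 1, 0), height - 1)
                    sx = min(max(x + kx - 1, 0), width - 1)
                    acc += frame[sy][sx] * kernel[ky][kx]
            # Keep the result inside the valid 8-bit range.
            out[y][x] = min(255, max(0, round(acc)))
    return out


# Box blur: every neighbor contributes equally and the weights sum to 1.
BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]
```

Because the weights sum to 1, the blur spreads brightness across neighboring pixels without changing the overall brightness of the frame.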

Color Space Transformations

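Color spaces such as RGB and YUV play a key role in video processing. Converting from RGB to YUV does not reduce the data size by itself, but it separates brightness (luma) from color (chroma). The chroma channels can then be stored at reduced resolution (chroma subsampling, for example 4:2:0) with little visible loss, because human vision is less sensitive to color detail than to brightness detail. This is especially important for signage that broadcasts real-time content.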
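
To make the size effect concrete, the sketch below converts one pixel with the commonly used BT.601 coefficients and computes the raw size of an 8-bit frame when the two chroma planes are stored at full, half, or quarter resolution (4:4:4, 4:2:2, 4:2:0). The helper names are illustrative, and real pipelines may use BT.709 coefficients or limited-range encoding instead.

```python
def rgb_to_yuv(r, g, b):
    """BT.601 conversion of one pixel; U and V are centered on zero."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


def frame_bytes(width, height, chroma="4:4:4"):
    """Raw size in bytes of one 8-bit frame for a chroma subsampling scheme."""
    # Average number of chroma samples (U plus V) stored per pixel.
    chroma_samples_per_pixel = {"4:4:4": 2.0, "4:2:2": 1.0, "4:2:0": 0.5}[chroma]
    return int(width * height * (1 + chroma_samples_per_pixel))


full = frame_bytes(3840, 2160, "4:4:4")
subsampled = frame_bytes(3840, 2160, "4:2:0")
print(full, subsampled, subsampled / full)
```

For a 4K frame, 4:2:0 storage needs half the bytes of 4:4:4 before any compression is applied.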

Real-Time Processing Considerations

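Real-time video processing requires minimizing latency: at 60 frames per second, each frame must be processed and rendered in about 16.7 ms. For example, graphics APIs such as Vulkan or DirectX give the application direct control over the GPU, which can reduce rendering time for complex effects like dynamic lighting or 3D animation.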

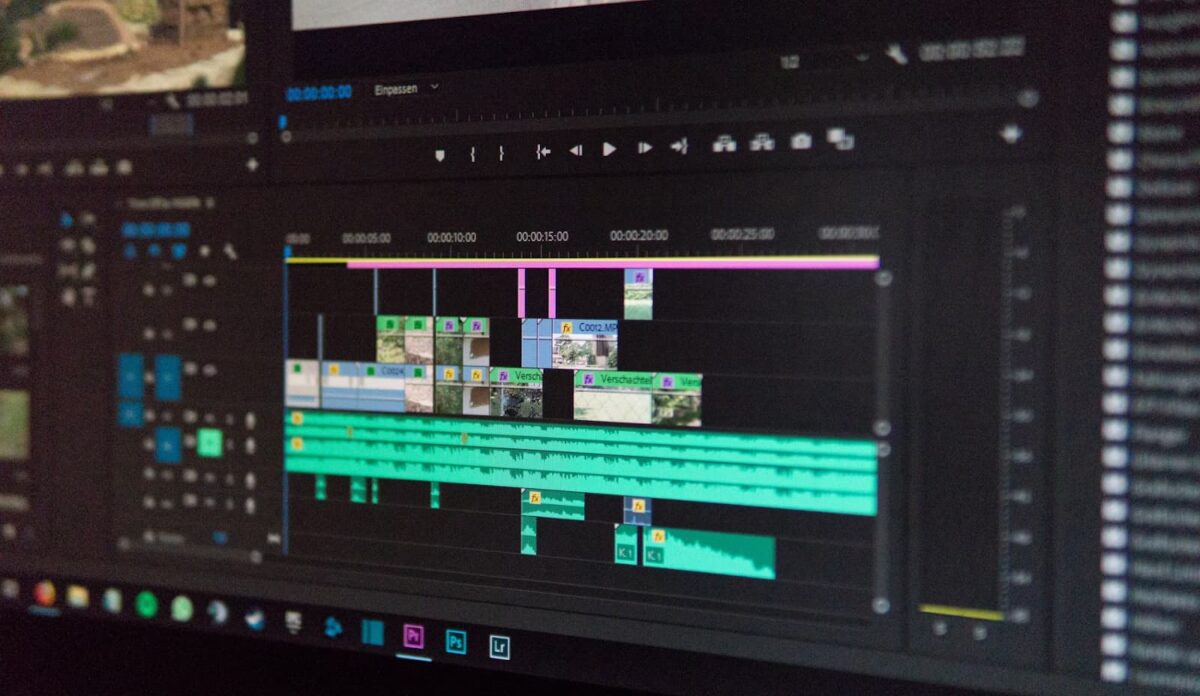

Custom Effect Development

Shader Programming

Shaders are the basis for creating visual effects. For example, a fragment shader can be used to create the “pixelation” effect popular in modern digital signage. Shaders written in HLSL or GLSL run on the GPU for many pixels in parallel, which provides both high performance and flexibility.
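
The per-pixel logic of such an effect can be prototyped on the CPU before it is ported to a shader. The sketch below is a reference implementation in Python, not shader code: each output pixel samples the top-left pixel of the block it falls in. In GLSL the same snapping is commonly written with `floor` on the texture coordinates. The function name and frame layout (a list of rows) are assumptions for illustration.

```python
def pixelate(frame, block_size):
    """Return a copy of the frame in which every block_size x block_size
    tile takes the value of the tile's top-left pixel."""
    if block_size < 1:
        raise ValueError("block_size must be at least 1")
    return [
        [
            # Snap both coordinates down to the start of their block.
            frame[(y // block_size) * block_size][(x // block_size) * block_size]
            for x in range(len(row))
        ]
        for y, row in enumerate(frame)
    ]
```

A reference version like this is also useful in automated tests, where the GPU output can be compared against it pixel by pixel.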

Particle Systems

Particle systems are used to create effects such as snow, rain, or fire. For example, in government applications, such effects can animate and emphasize important notifications. Controlling particle systems requires careful tuning of parameters such as the speed, direction, and lifetime of particles.
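
A minimal sketch of the update loop behind such a system is shown below. It assumes 2D particles with a velocity in units per second and a remaining lifetime in seconds; the `Particle` class and the `step` function are illustrative names, and rendering is left out.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float        # horizontal speed, units per second
    vy: float        # vertical speed, units per second
    lifetime: float  # seconds remaining before the particle is removed


def step(particles, dt, gravity=0.0):
    """Advance all particles by dt seconds and drop the expired ones."""
    survivors = []
    for p in particles:
        remaining = p.lifetime - dt
        if remaining <= 0:
            continue  # particle has expired
        vy = p.vy + gravity * dt  # update velocity first, then position
        survivors.append(
            Particle(p.x + p.vx * dt, p.y + vy * dt, p.vx, vy, remaining)
        )
    return survivors
```

Calling `step` once per frame with the elapsed time keeps the motion independent of the frame rate, and tuning speed, direction, and lifetime comes down to choosing the initial values of each particle.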

Transition Effects

Transitions between slides or video clips should be smooth and visually appealing. For example, a “dissolve” effect can be realized through linear interpolation of alpha channel values. This creates a sense of continuity and enhances the user experience.
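
The interpolation can be sketched as follows, assuming two frames of equal size stored as rows of (R, G, B) tuples and a `progress` value that runs from 0.0 to 1.0 over the duration of the transition. The function names are illustrative.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def dissolve(frame_a, frame_b, progress):
    """Blend two equally sized RGB frames.

    progress = 0.0 shows frame_a only, 1.0 shows frame_b only.
    """
    t = min(1.0, max(0.0, progress))  # clamp out-of-range input
    return [
        [
            tuple(round(lerp(ca, cb, t)) for ca, cb in zip(pixel_a, pixel_b))
            for pixel_a, pixel_b in zip(row_a, row_b)
        ]
        for row_a, row_b in zip(frame_a, frame_b)
    ]
```

Here `progress` acts as the alpha of the incoming frame; passing it through an easing curve before the blend is a common way to soften the start and end of the transition.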

Performance Optimization

GPU Acceleration

GPU hardware acceleration allows complex effects to be processed with minimal CPU overhead. GPU compute platforms such as CUDA or OpenCL can significantly speed up computationally heavy operations.

Memory Management

Efficient memory management is critical. For example, keeping a small pool of preallocated frame buffers avoids per-frame allocations and smooths playback, and releasing unused textures frees up resources. This is especially important for systems with limited RAM.
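
One way to release unused textures automatically is a cache with a fixed byte budget that evicts the least recently used entry first. The sketch below is a hypothetical `TextureCache` built on the standard library; it assumes texture data is a bytes-like object, and a real implementation would also free the matching GPU resource on eviction.

```python
from collections import OrderedDict


class TextureCache:
    """Keeps textures in memory up to max_bytes, evicting the least
    recently used entry when the budget is exceeded."""

    def __init__(self, max_bytes):
        self.max_bytes = max_bytes
        self.used_bytes = 0
        self._entries = OrderedDict()  # name -> bytes-like texture data

    def get(self, name):
        data = self._entries.get(name)
        if data is not None:
            self._entries.move_to_end(name)  # mark as most recently used
        return data

    def put(self, name, data):
        if name in self._entries:
            self.used_bytes -= len(self._entries.pop(name))
        self._entries[name] = data
        self.used_bytes += len(data)
        # Evict oldest entries, but always keep the one just inserted.
        while self.used_bytes > self.max_bytes and len(self._entries) > 1:
            _, evicted = self._entries.popitem(last=False)
            self.used_bytes -= len(evicted)
```

The byte budget would be chosen per device, for example a much smaller value on a single-board computer than on a dedicated media server.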

Render Pipeline Optimization

Render pipeline optimization includes minimizing the number of draw calls and using batching techniques, in which objects that share the same texture or shader are submitted together. This allows for faster rendering of complex scenes and reduces system load.
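
The idea behind batching can be sketched without a graphics API: group everything that shares a texture so that each texture needs only one bind and one draw call. The sprite dictionaries and the `batch_draw_calls` name below are assumptions for illustration.

```python
from collections import defaultdict


def batch_draw_calls(sprites):
    """Group sprite quads by texture so each texture is drawn once.

    Each sprite is a dict with a "texture" name and a "quad" payload.
    Returns a dict mapping texture name to the list of quads to draw.
    """
    batches = defaultdict(list)
    for sprite in sprites:
        batches[sprite["texture"]].append(sprite["quad"])
    return dict(batches)


sprites = [
    {"texture": "logo", "quad": (0, 0, 64, 64)},
    {"texture": "ticker", "quad": (0, 500, 800, 40)},
    {"texture": "logo", "quad": (700, 0, 64, 64)},
]
batches = batch_draw_calls(sprites)
print(len(sprites), "sprites in", len(batches), "draw calls")
```

Grouping changes the order in which objects are drawn, so overlapping semi-transparent layers may still need to be sorted by depth and batched separately.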

Integration with Content Management Systems

API Development

Creating APIs allows you to integrate custom effects into content management systems (CMS). For example, an API can expose effect parameters, such as brightness or animation speed, so that operators can adjust them through the CMS user interface.
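
A minimal sketch of the validation layer behind such an API is shown below. It assumes the CMS sends a dictionary of parameter names and numeric values; the `EffectSettings` class, the parameter ranges in `LIMITS`, and the `apply_update` function are hypothetical and would be replaced by whatever the actual CMS defines.

```python
from dataclasses import dataclass, asdict


@dataclass
class EffectSettings:
    brightness: float = 1.0       # multiplier, assumed range 0.0 to 2.0
    animation_speed: float = 1.0  # multiplier, assumed range 0.1 to 4.0


# Allowed range per parameter (illustrative values).
LIMITS = {"brightness": (0.0, 2.0), "animation_speed": (0.1, 4.0)}


def apply_update(settings, payload):
    """Return new settings built from a CMS payload, rejecting bad input."""
    values = asdict(settings)
    for key, value in payload.items():
        if key not in LIMITS:
            raise KeyError(f"unknown parameter: {key}")
        low, high = LIMITS[key]
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        if not is_number or not low <= value <= high:
            raise ValueError(f"{key} must be a number between {low} and {high}")
        values[key] = float(value)
    return EffectSettings(**values)
```

Rejecting out-of-range values at the API boundary keeps a mistyped value in the CMS from producing an unreadable or blank display.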

Plugin Architecture

Plugin architecture allows you to extend the functionality of a CMS without having to modify the underlying code. Developing a plugin to add transition effects, for example, makes the system more flexible and scalable.
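
One common way to build this is a registry that maps effect names to functions, so that new effects are added by registering them and not by editing the core code. The sketch below is an illustrative, in-process version; `EFFECTS`, `register_effect`, and `run_effect` are hypothetical names, and the two effects operate on single brightness values to keep the example short.

```python
EFFECTS = {}  # effect name -> callable(value_a, value_b, progress)


def register_effect(name):
    """Decorator that adds a transition function to the registry."""
    def decorator(func):
        if name in EFFECTS:
            raise ValueError(f"effect already registered: {name}")
        EFFECTS[name] = func
        return func
    return decorator


@register_effect("fade")
def fade(value_a, value_b, progress):
    """Blend linearly from value_a to value_b."""
    return value_a + (value_b - value_a) * progress


@register_effect("cut")
def cut(value_a, value_b, progress):
    """Switch abruptly at the midpoint of the transition."""
    return value_b if progress >= 0.5 else value_a


def run_effect(name, *args):
    """Look up an effect by name, as the host system would."""
    return EFFECTS[name](*args)
```

The host only needs to know the effect name stored with the content, so a plugin that registers a new name becomes available without changes to the host.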

Version Control and Updates

Using version control systems such as Git allows you to track changes to your code and easily roll back to previous versions. Regular updates ensure compatibility with new CMS versions and provide bug fixes.

Testing and Quality Assurance

Automated Testing Frameworks

Automated testing helps detect errors in code at an early stage. Tools such as Selenium (for browser-based signage players) or Appium (for mobile applications) allow you to test how video effects interact with the user interface.

Performance Benchmarking

Performance testing includes measuring render times, memory usage, and CPU utilization. For example, comparing results across different devices shows where the code needs to be optimized to run well on a wide range of hardware.
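
A minimal benchmarking harness can be built from the standard library alone. The sketch below assumes a hypothetical `render(frame_index)` callable, times it over a number of frames, and then measures peak Python memory in a separate traced call so that the tracing overhead does not distort the timings. It does not measure CPU utilization or GPU memory, which need platform tools.

```python
import time
import tracemalloc


def benchmark(render, frames=100):
    """Measure per-frame render time and peak Python memory use."""
    durations = []
    for index in range(frames):
        start = time.perf_counter()
        render(index)
        durations.append(time.perf_counter() - start)
    durations.sort()

    # Trace memory in a separate call: tracemalloc slows execution.
    tracemalloc.start()
    render(0)
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    return {
        "mean_ms": 1000 * sum(durations) / frames,
        "p95_ms": 1000 * durations[min(frames - 1, int(0.95 * frames))],
        "peak_bytes": peak_bytes,
    }
```

Reporting a high percentile alongside the mean matters for signage, because occasional slow frames show up as visible stutter even when the average looks fine.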

Compatibility Testing

Compatibility testing involves checking how effects work on different platforms and devices. For example, effects designed for 4K displays should also render correctly on lower-resolution devices.

FAQ

What programming languages are best suited for custom video effect development?

The best-suited languages are those with strong graphics support, such as C++, Python (with the OpenCV library), and JavaScript (using WebGL). The shaders themselves are written in a shading language such as GLSL or HLSL.

How can you ensure that video effects meet government accessibility guidelines?

Follow WCAG standards, including the use of high-contrast colors and text alternatives. For example, text should be readable even against dynamic backgrounds.

What are the key considerations for creating effects that work across different display sizes?

Use adaptive design, scalable textures, and relative units of measure. For example, the resolution of the effect should automatically adjust to the screen parameters.
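
As a sketch of what relative units can look like in code, the helpers below compute a uniform scale factor that fits a design resolution inside a given screen and convert a rectangle expressed as fractions of the screen into whole pixels. The function names and the (x, y, width, height) layout are assumptions for illustration.

```python
def fit_scale(design_size, screen_size):
    """Uniform scale that fits the design inside the screen while
    preserving its aspect ratio (letterboxing if the ratios differ)."""
    design_w, design_h = design_size
    screen_w, screen_h = screen_size
    return min(screen_w / design_w, screen_h / design_h)


def to_pixels(relative_rect, screen_size):
    """Convert (x, y, w, h) given as 0.0-1.0 fractions of the screen
    into whole-pixel coordinates."""
    screen_w, screen_h = screen_size
    x, y, w, h = relative_rect
    return (
        round(x * screen_w),
        round(y * screen_h),
        round(w * screen_w),
        round(h * screen_h),
    )


# A ticker occupying the bottom band of the screen, defined once
# and resolved per display.
ticker = (0.1, 0.8, 0.8, 0.1)
print(to_pixels(ticker, (1920, 1080)))
print(to_pixels(ticker, (3840, 2160)))
```

Defining layout in fractions means the same effect definition can be deployed to displays of different sizes without per-device editing.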